About

This second ACM C&C workshop on explainable AI for the Arts (XAIxArts) brought together a community of researchers and creative practitioners in Human-Computer Interaction (HCI), Interaction Design, AI, explainable AI (XAI), and Digital Arts to explore the role of XAI for the Arts. XAI is a core concern of Human-Centred AI and relies heavily on HCI techniques to explore how to make complex, difficult-to-understand AI models more understandable to people. Our first workshop explored the landscape of XAIxArts and identified emergent themes. To move the discourse on XAIxArts forward and to contribute to Human-Centred AI more broadly, this workshop:

- Brought researchers together to expand the XAIxArts community;

- Collected and critically reflected on current and emerging XAIxArts practice;

- Co-developed a manifesto for XAIxArts;

- Co-developed a proposal for an edited book on XAIxArts;

- Engaged with the wider discourse on Human-Centred AI.

Workshop Date: Sunday, 23rd June, 2024

Venue: Hybrid (Chicago, IL, USA and Online).

Archived Workshop Description

Nick Bryan-Kinns, Corey Ford, Shuoyang Zheng, Helen Kennedy, Alan Chamberlain, Makayla Lewis, Drew Hemment, Zijin Li, Qiong Wu, Lanxi Xiao, Gus Xia, Jeba Rezwana, Michael Clemens, and Gabriel Vigliensoni. 2024. Explainable AI for the Arts 2 (XAIxArts2). In Proceedings of the 16th Conference on Creativity & Cognition (C&C ’24). Association for Computing Machinery, New York, NY, USA, 86–92. https://doi.org/10.1145/3635636.3660763

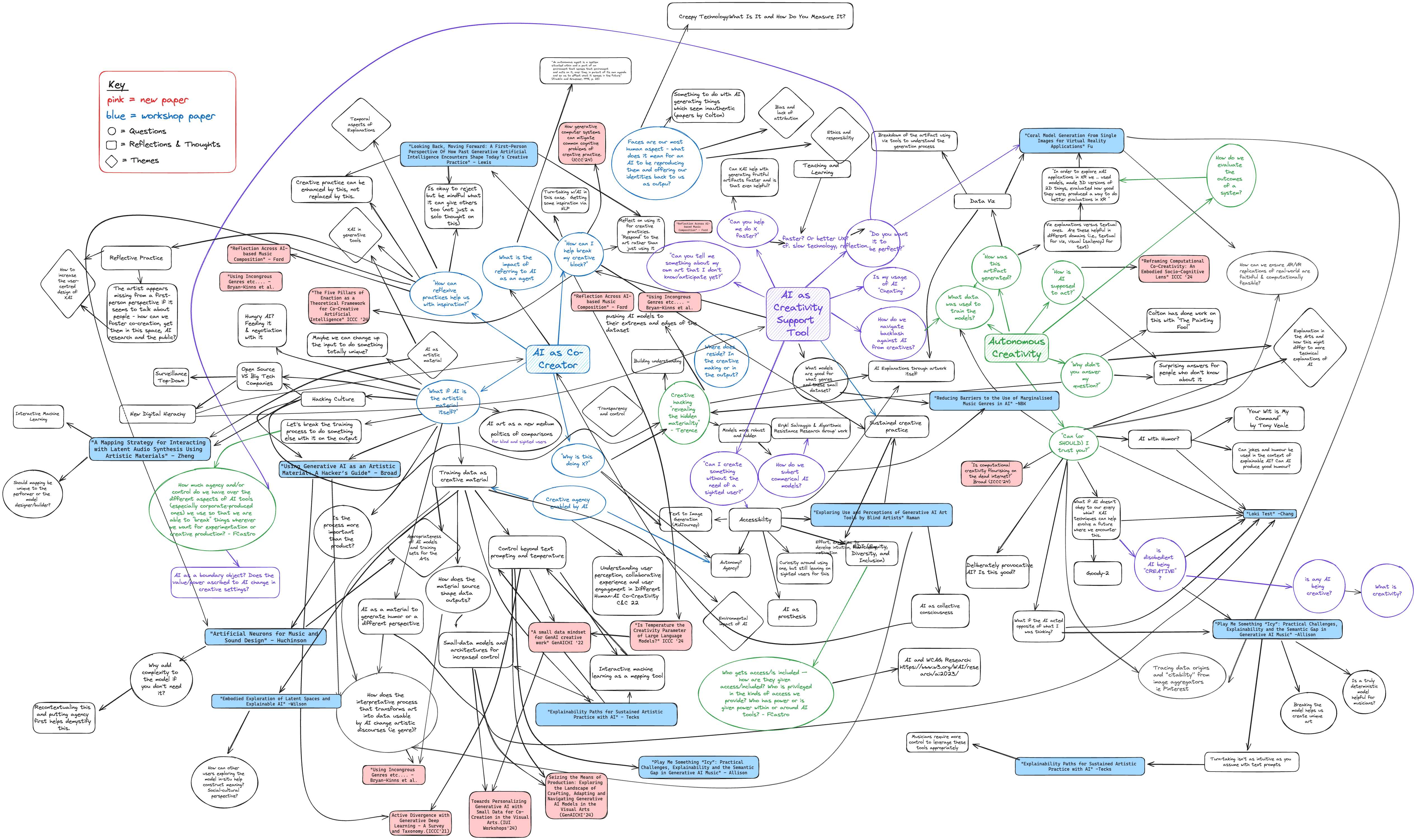

Co-created mindmap from the workshop

Co-created mindmap from the workshop exploring the themes that led to the creation of the XAIxArts Manifesto. High-resolution interactive mindmap: https://app.excalidraw.com/l/8sDmlvduhSt/6OVLf5GFL7f

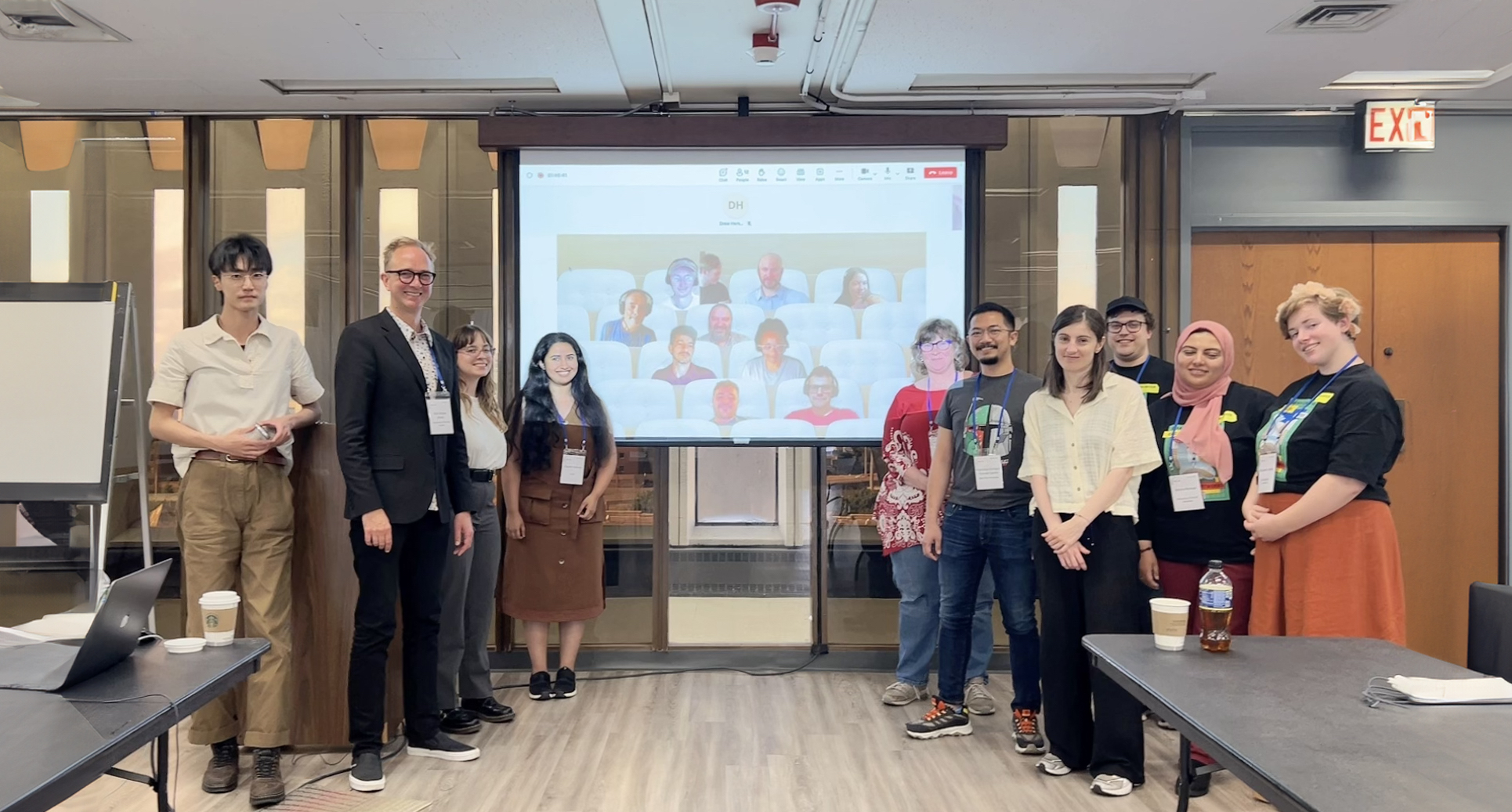

Group photograph from the workshop

Group photograph after the completion of the first draft of the XAIxArts Manifesto. This photo was taken by Jie Fu.

Themes

This workshop will explore how XAI might be used in the Arts and how the Arts might contribute to new forms of XAI. It will examine the challenges and opportunities at the intersection of XAI and the Arts (XAIxArts), offering a fresh and critical view on the explainable aspects of Responsible AI and Human-Centred AI more broadly. The themes include but are not limited to:

Temporal Aspects of Explanations

Moment-by-moment feedback is essential for the creative process in Arts domains from music to sketching. For example, real-time AI audio generation requires immediate feedback and audio output whilst the user navigates an AI model. Importantly for XAIxArts, in-the-moment explanations differ in style from the post-hoc explanations typical of broader XAI research. The role of time itself may also be different in XAI for the Arts compared to broader XAI approaches. For example, creative practice unfolds over seconds, days, or even years. This contrasts with the single-shot use of XAI more broadly, where an explanation might be used to understand an AI’s decision and is then discarded. Additionally, AI explanations should consider the phase of the creative process, as it influences user preferences for AI contributions.

Tailored Explanations

Explanations need to be tailored to individual users based on their skills, experience, and context, as there can be no single appropriate explanation. For example, explanations could be tailored to an individual’s AI literacy and abilities. Moreover, in the Arts we may need to tailor explanations to audiences and even bystanders.

AI as Material

The AI model itself may be an artwork. In XAIxArts, traversing an AI model may be both an artwork and a form of explanation. For example, artists might navigate a generative AI model to expose its bias, or navigate a model’s latent space to explain its limits. They might also create AI artworks that are experienced, and even navigated, by audiences as a form of explanation. Indeed, current XAI could be augmented using creative methods from the Arts, interaction design and HCI to address XAI’s current focus on technical and functional explanations. These are artist-led rather than model-led or technologist-driven explanations of AI models in the Arts. However, they raise questions of who or what might be responsible for an explanation if it relies on sense-making of the art itself: is it the AI model, the artist, or the audience? And how do we use Arts practices to inform the design of more intuitive, holistic, useful or engaging ways in which the decisions and actions of AI can be made more understandable to people?

XAI in Generative Tools

Current AI generative tools provide surprisingly little explanation of how they work, how artistic requirements might be specified or explored, or how their use impacts the environment through energy consumption. Furthermore, in the artistic use of AI Generated Content (AIGC), we might want to actively search out and recreate errors or glitches in AI models as part of creative practice rather than removing them as might be conventionally undertaken with XAI. Here, XAI approaches such as contrastive examples or conversational interfaces might offer greater opportunities for trial-and-error creative practice with AI. Thinking further down the AI-artist journey, XAI may offer opportunities for artistic practice with AIGC to be recorded, revisited, and reinterpreted as a form of reflective practice with AI. This explanation of practice might also offer windows into the creative mind of the artist for the audience and consumers of their AIGC works.

Ethics and Responsibility

Responsible AI is a key research field that XAI contributes to, especially in terms of explaining and understanding the ethics of AIGC. A concern for XAIxArts is how we might both support and disrupt trust in AI in the Arts. XAI could take a proactive role in explaining the provenance and attribution of AIGC and its biases. However, care needs to be taken in XAIxArts as the explanation of an AI Artwork may essentially devalue the constructed meaning of the piece.

Proceedings

Proceedings of the workshop are available at https://arxiv.org/abs/2406.14485

Schedule

Sunday, 23rd June, 2024 Workshop Schedule

All times are displayed in the workshop time zone (Chicago, IL, UTC-05:00).

09:00 Arrival

09:30 Welcome and ice breaker (15 mins)

09:45 Presentations group A + Q&A (10 min each) (60 mins)

- Gayatri Raman and Erin Brady. Exploring Use and Perceptions of Generative AI Art Tools by Blind Artists [pdf]

- Jie Fu, Shun Fu and Mick Grierson. Coral Model Generation from Single Images for Virtual Reality Applications [pdf] [video]

- Jia-Rey Chang. Loki Test [pdf] [video 1] [video 2]

- Makayla Lewis. Looking Back, Moving Forward: A First-Person Perspective Of How Past Generative Artificial Intelligence Encounters Shape Today’s Creative Practice [pdf]

- Terence Broad. Using Generative AI as an Artistic Material: A Hacker’s Guide [pdf] [video]

10:45 Coffee break (15 mins)

11:00 Brainstorm about XAIxArts themes (30 mins)

11:30 Co-develop XAIxArts manifesto (30 mins)

12:00 Plans for network and community e.g. edited book (30 mins)

12:30 Lunch (90 mins)

14:00 Presentations group B + Q&A (10 min each) (60 mins)

- Shuoyang Zheng, Anna Xambó Sedó and Nick Bryan-Kinns. A Mapping Strategy for Interacting with Latent Audio Synthesis Using Artistic Materials [pdf] [video]

- Simon Hutchinson. Artificial Neurons for Music and Sound Design [pdf] [video]

- Elizabeth Wilson, Deva Schubert, Mika Satomi, Alex McLean and Juan Felipe Amaya Gonzalez. Embodied Exploration of Latent Spaces and Explainable AI [pdf] [video]

- Austin Tecks, Thomas Peschlow and Gabriel Vigliensoni. Explainability Paths for Sustained Artistic Practice with AI [pdf] [video]

- Jesse Allison, Drew Farrar, Treya Nash, Carlos G. Román, Morgan Weeks and Fiona Xue Ju. Play Me Something “Icy”: Practical Challenges, Explainability and the Semantic Gap in Generative AI Music [pdf] [video]

- Nick Bryan-Kinns and Zijin Li. Reducing Barriers to the Use of Marginalised Music Genres in AI [pdf] [video]

15:00 Coffee break (15 mins)

15:15 Brainstorm about XAIxArts themes (15 mins)

15:30 Co-develop XAIxArts manifesto (15 mins)

15:45 Plans for network and community e.g. edited book (15 mins)

16:00 Close

Organizers

- Nick Bryan-Kinns (Chair), University of the Arts London, United Kingdom

- Corey Ford, Queen Mary University of London, United Kingdom

- Shuoyang Zheng, Queen Mary University of London, United Kingdom

- Helen Kennedy, University of Nottingham, United Kingdom

- Alan Chamberlain, University of Nottingham, United Kingdom

- Makayla Lewis, Kingston University, United Kingdom

- Drew Hemment, University of Edinburgh, United Kingdom

- Zijin Li, Central Conservatory of Music, China

- Qiong Wu, Tsinghua University, China

- Lanxi Xiao, Tsinghua University, China

- Gus Xia, MBZUAI, United Arab Emirates

- Jeba Rezwana, Towson University, United States

- Michael Clemens, University of Utah, United States

- Gabriel Vigliensoni, Concordia University, Canada

Acknowledgements

We would like to acknowledge the support of the Engineering and Physical Sciences Research Council: [grant number EP/T022493/1] Horizon: Trusted Data-Driven Products, [grant number EP/Y009800/1] AI UK: Creating an International Ecosystem for Responsible AI Research and Innovation, and [grant number EP/V00784X/1] UKRI Trustworthy Autonomous Systems Hub.

Contacts

If you have any questions, feel free to contact Nick, Corey or Shuoyang at the following email addresses:

- n.bryankinns@arts.ac.uk

- c.j.ford@qmul.ac.uk

- shuoyang.zheng@qmul.ac.uk