About

This first ACM C&C workshop on explainable AI for the Arts (XAIxArts) brings together a community of researchers in HCI, Interaction Design, AI, explainable AI (XAI), and digital arts to explore the role of XAI for the Arts. XAI is a core concern of Human-Centred AI and relies heavily on Human-Computer Interaction techniques to explore how complex, difficult-to-understand AI models, such as deep learning models, can be made more understandable to people. However, to date XAI research has primarily focused on work-oriented and task-oriented explanations of AI, and there has been little research on XAI for creative domains such as the arts. The workshop will take place online and will question the nature, meaning, and role of the explainability of AI for the Arts. The goals of this online workshop are to:

- Build an interdisciplinary XAIxArts research community and network;

- Map out the current and future possible landscapes of XAIxArts;

- Critically reflect on the potential of XAI for the Arts, forming the basis for future collaborations and publications;

- Develop ideas for an outline proposal for an edited book on XAIxArts.

Workshop day (online): Mon 19th June 2023

Archived Workshop Description

Nick Bryan-Kinns, Corey Ford, Alan Chamberlain, Steven David Benford, Helen Kennedy, Zijin Li, Wu Qiong, Gus G. Xia, and Jeba Rezwana. 2023. Explainable AI for the Arts: XAIxArts. In Proceedings of the 15th Conference on Creativity and Cognition (C&C '23). Association for Computing Machinery, New York, NY, USA, 1–7. https://doi.org/10.1145/3591196.3593517

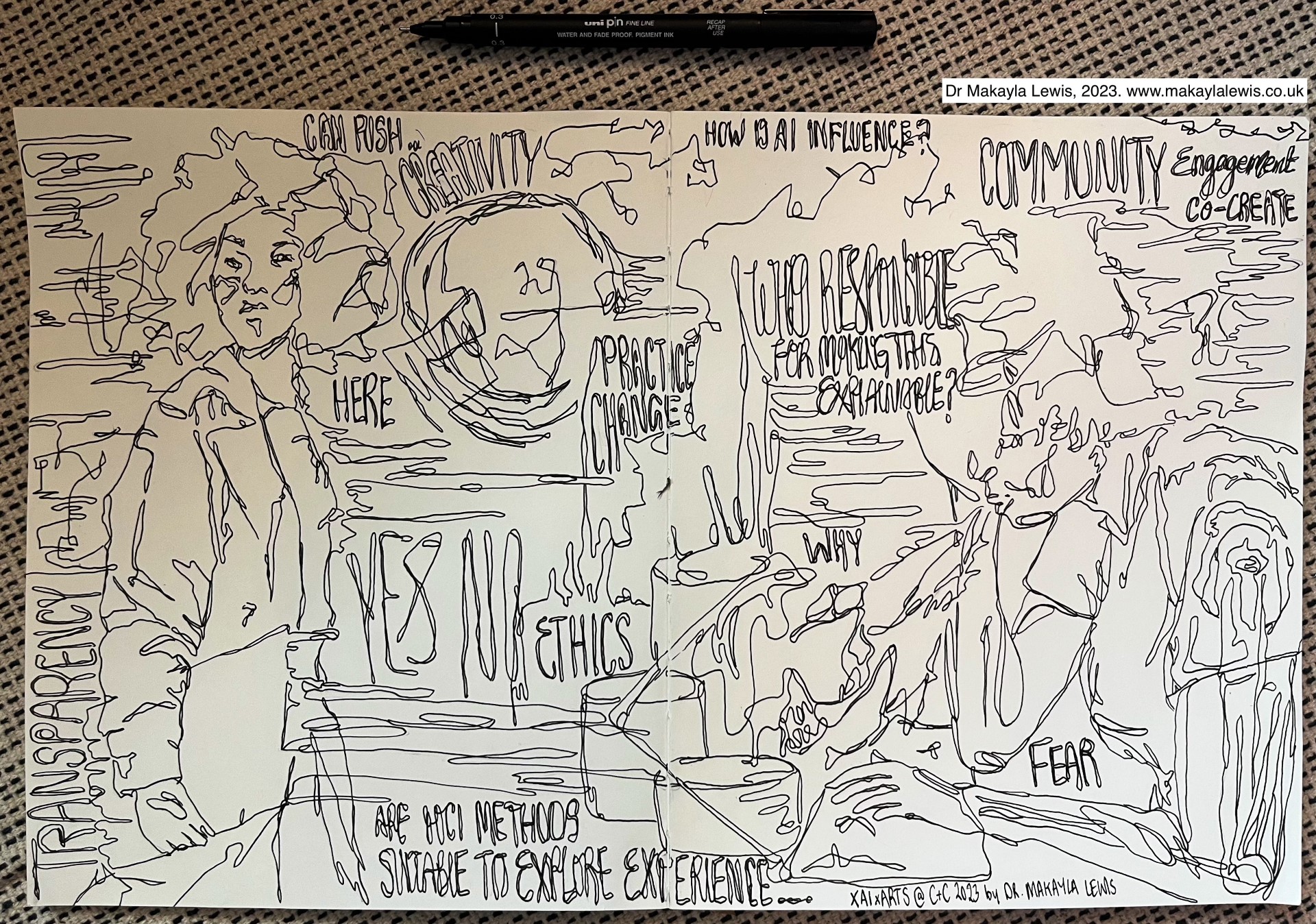

Visual Reflection from the Workshop

Attribution: Dr Makayla Lewis, 2023. All Rights Reserved. Website: www.makaylalewis.co.uk

Themes

The themes include but are not limited to:

The Nature of Explanations

There are open questions about how much explanation is needed or even useful in XAIxArts. What does it mean to actually understand an AI in a creative context, i.e., how much explanation is useful versus the risk of over-explaining, and how can we design for both explanation and serendipitous interaction? How do we strike a balance between explanation, exploration, and surprise? Being surprised, confused, and reflective are often integral to creative practice but are in opposition to the more functional goals of XAI.

AI Models, Features, and Training Data

There are many generative AI models which create content across the arts, from visual arts to music. However, there is very little research on how these AI models are actually used and appropriated in creative practice. There are open questions about: what kinds of AI models are most amenable to XAIxArts for different artistic domains such as music or visual arts; which XAI models are more amenable to different artistic processes; which features of AI models offer the most useful explanations for different creative practices; and what kinds of training sets are more or less useful for creating XAIxArts.

User Centred Design of XAIxArts

A fundamental concern of XAI research is how AI can be made more understandable to humans. There is little research on how User Centred Design (UCD) can be used in the creation of XAI systems, and no current research on UCD for XAIxArts. There are open questions about how artists and creative practitioners can be involved in the co-design of generative AI systems involving data scientists and interaction designers, for example, how to include musicians in the design of XAI generative music systems.

Interaction Design

As XAIxArts is a nascent research area with no XAI models currently being used in practice, there is little research on how to design the interaction with XAI for creative practice. This leaves open Interaction Design questions including: which features of the AI model to expose to the user; how to visualise the highly multi-dimensional data typical of AI models, e.g. how to visualise latent spaces with 4 or more semantic dimensions; how to navigate and explore the spaces inside AI models; how to handle the entanglement between dimensions within a latent space; and how to manage interaction with XAI in combination with the temporal and affective dimensions of artistic practice.

Schedule

Monday 19 June 2023 Workshop Schedule (times in UK time: UTC+1)

09:00 Welcome & organiser introductions (30 mins)

09:30 Session A: 7 x Presentations & demos & Q&A (60 mins)

10:30 Break (30 mins)

11:00 Initial Landscaping/ Themes/ Community building (60 mins)

12:00 Lunch break (60 mins)

13:00 Joint Landscaping/ Themes/ Community building (60 mins)

14:00 Session B: 7 x Presentations & demos & Q&A (60 mins)

15:00 Break (30 mins)

15:30 Revise Landscaping/ Themes/ Community building (60 mins)

16:30 Wrap up & next steps

16:45 Close

Accepted Submissions

Proceedings of the Workshop are available at arXiv:2310.06428

arXiv:2308.08576 [pdf] Jamal Knight, Andrew Johnston and Adam Berry. (2023). Artistic control over the glitch in AI-generated motion capture. [video]

arXiv:2308.09877 [pdf] Hanjie Yu, Yan Dong and Qiong Wu. (2023). User-centric AIGC products: Explainable Artificial Intelligence and AIGC products. [video]

arXiv:2309.06227 [pdf] Cheshta Arora and Debarun Sarkar. (2023). The Injunction of XAIxArt: Moving beyond explanation to sense-making.

arXiv:2309.07028 [pdf] Marianne Bossema, Rob Saunders and Somaya Ben Allouch. (2023). Human-Machine Co-Creativity with Older Adults - A Learning Community to Study Explainable Dialogues. [video]

arXiv:2309.14877 [pdf] Petra Jääskeläinen. (2023). Explainable Sustainability for AI in the Arts. [video]

arXiv:2306.02327 [pdf] Drew Hemment, Matjaz Vidmar, Daga Panas, Dave Murray-Rust, Vaishak Belle and Ruth Aylett. (2023). Agency and legibility for artists through Experiential AI. [video]

arXiv:2308.11424 [pdf] Makayla Lewis. (2023). AIxArtist: A First-Person Tale of Interacting with Artificial Intelligence to Escape Creative Block.

arXiv:2309.12345 [pdf] Luís Arandas, Mick Grierson and Miguel Carvalhais. (2023). Antagonising explanation and revealing bias directly through sequencing and multimodal inference. [video]

arXiv:2309.04491 [pdf] Nicola Privato and Jack Armitage. (2023). A Context-Sensitive Approach to XAI in Music Performance. [video]

arXiv:2308.06089 [pdf] Ashley Noel-Hirst and Nick Bryan-Kinns. (2023). An Autoethnographic Exploration of XAI in Algorithmic Composition. [video]

arXiv:2308.09586 [pdf] Michael Clemens. (2023). Explaining the Arts: Toward a Framework for Matching Creative Tasks with Appropriate Explanation Mediums. [video]

Note: the following submissions are available via other online services:

Lanxi Xiao, Weikai Yang, Haoze Wang, Shixia Liu and Qiong Wu. (2023). Why AI Fails: Parallax. [pdf]

Lanxi Xiao, Weikai Yang, Haoze Wang, Shixia Liu and Qiong Wu. (2023). Why AI Fails: Shortcut. [video]

Gabriel Vigliensoni and Rebecca Fiebrink. (2023). Interacting with neural audio synthesis models through interactive machine learning. [pdf] [video]

Organisers

- Nick Bryan-Kinns, Queen Mary University of London, United Kingdom

- Corey Ford, Queen Mary University of London, United Kingdom

- Alan Chamberlain, University of Nottingham, United Kingdom

- Steve Benford, University of Nottingham, United Kingdom

- Helen Kennedy, University of Nottingham, United Kingdom

- Zijin Li, Central Conservatory of Music, China

- Wu Qiong, Tsinghua University, China

- Gus Xia, NYU Shanghai, China

- Jeba Rezwana, University of North Carolina at Charlotte, USA

Acknowledgements

We would like to acknowledge the support of the Engineering and Physical Sciences Research Council through PETRAS 2 [grant number EP/S035362/1] and the UKRI Trustworthy Autonomous Systems Hub [grant number EP/V00784X/1]. We also acknowledge support from the UKRI Centre for Doctoral Training in Artificial Intelligence and Music, supported by UK Research and Innovation [grant number EP/S022694/1].

Contacts

If you have any questions, feel free to contact Nick or Corey at the following email addresses:

- n.bryankinns@arts.ac.uk

- c.j.ford@qmul.ac.uk